Made with 🧀 in Madison

My only New Year’s resolution is to practice mindfulness every day. I have been a long-time subscriber to Headspace, but have had trouble making it a consistent habit. 🤞

ActivityPub is cool because we finally have an open protocol for posting, subscribing, and replying to content across apps.

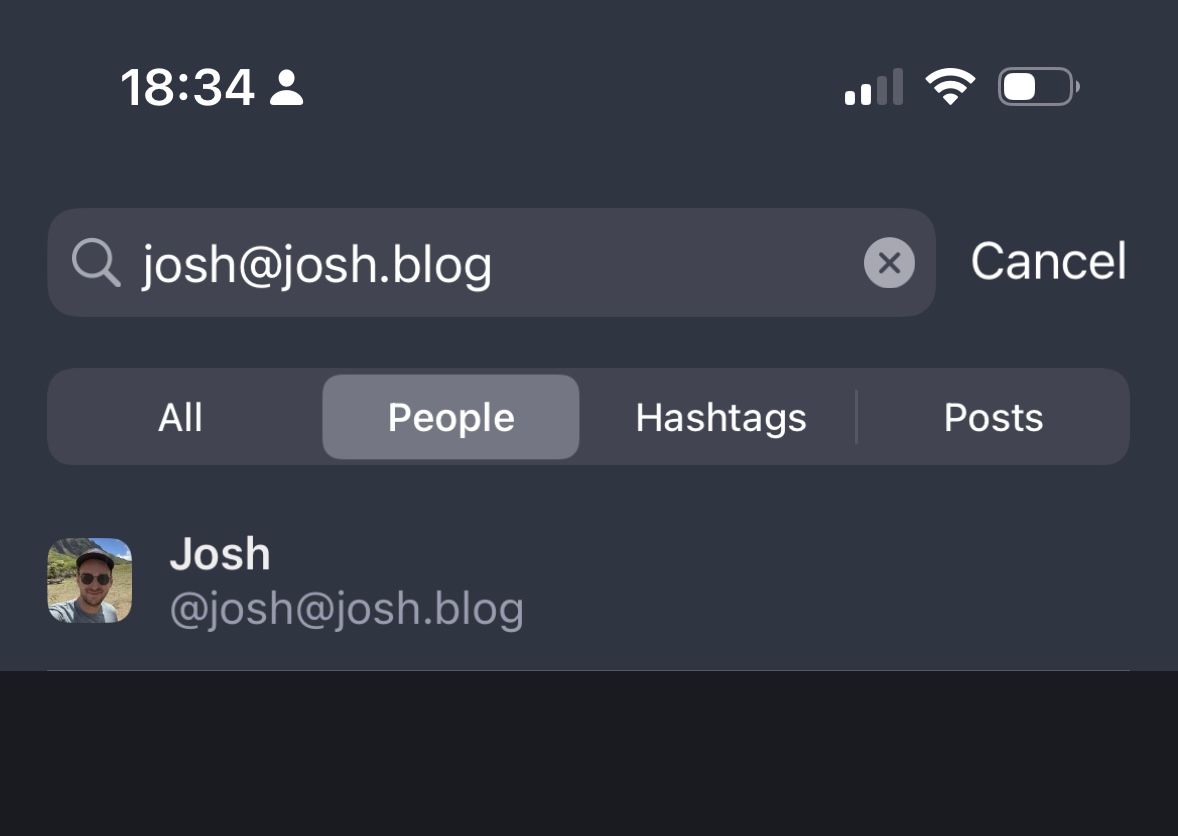

Mastodon is not Twitter. I get why people think of Mastodon as an open-source or distributed Twitter, but it’s more than that. If you add ActivityPub support to your WordPress site, others can see the comments and reply from Mastodon! I think this will become more obvious as time goes on and apps like Tumblr start to implement ActivityPub support as well.

I set up ActivityPub for WordPress. You can follow my blog on Mastodon at @josh.

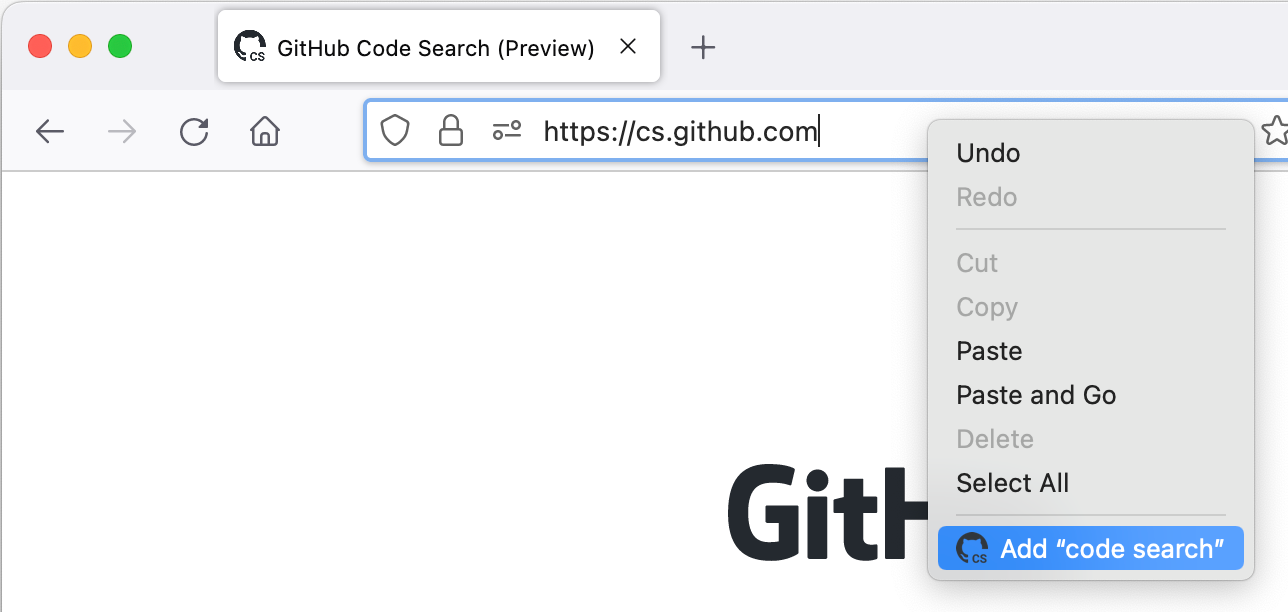

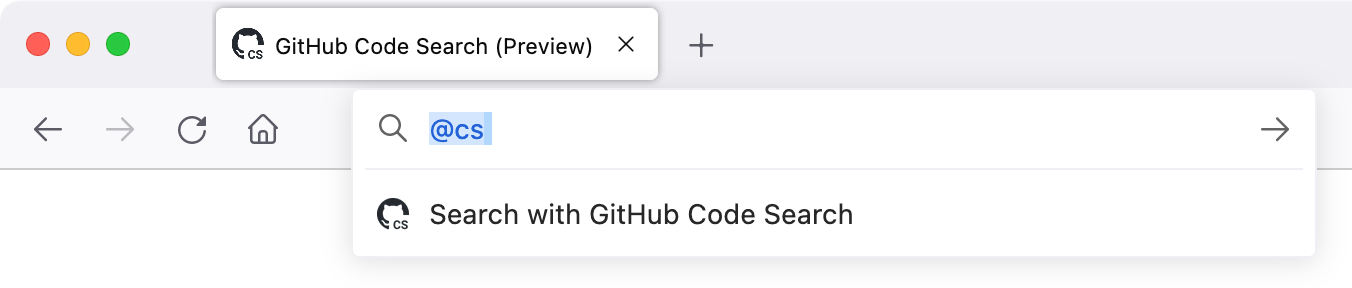

I recently started using the GitHub Code Search preview and noticed you can configure Firefox to search GitHub directly from the address bar.
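One way to set this up is a Firefox keyword bookmark, where `%s` in the bookmark URL is replaced by whatever you type after the keyword. Here is a rough sketch of that substitution. The template URL and the `expandKeywordSearch` helper are my own assumptions for illustration, not anything official from GitHub or Firefox, and Firefox's real encoding may differ in the details.

```typescript
// Assumed bookmark URL for GitHub code search; "%s" marks where the query goes.
const GITHUB_CODE_SEARCH_TEMPLATE = "https://github.com/search?type=code&q=%s";

// Hypothetical helper that mimics how a keyword bookmark expands its template.
function expandKeywordSearch(template: string, query: string): string {
  const encoded = encodeURIComponent(query);
  // Replace only the first "%s"; the callback avoids special "$" replacement patterns.
  return template.replace("%s", () => encoded);
}

// Example: what typing a keyword plus "repo:mastodon/mastodon activitypub" might open.
console.log(
  expandKeywordSearch(GITHUB_CODE_SEARCH_TEMPLATE, "repo:mastodon/mastodon activitypub")
);
```

If you save a bookmark with a URL like the template above and give it a keyword such as `gh`, typing `gh some query` in the address bar should take you straight to the results.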